BOOK OVERVIEW

Contents

Part I: Inference and Learning Machines

- A Statistical Machine Learning Framework

- Set Theory for Concept Modeling

- Formal Machine Learning Algorithms

Part II: Deterministic Learning Machines

- Linear Algebra for Machine Learning

- Matrix Calculus for Machine Learning

- Convergence of Time-Invariant Dynamical Systems

- Batch Learning Algorithm Convergence

Part III: Stochastic Learning Machines

- Random Vectors and Random Functions

- Stochastic Sequences

- Probability Models of Data Generation

- Monte Carlo Markov Chain Algorithm Convergence

- Adaptive Learning Algorithm Convergence

Part IV: Generalization Performance

- Statistical Learning Objective Function Design

- Simulation Methods for Evaluating Generalization

- Analytic Formulas for Evaluating Generalization

- Model Selection and Evaluation

Copyright 2019-2021 by Richard M. Golden. All rights reserved.

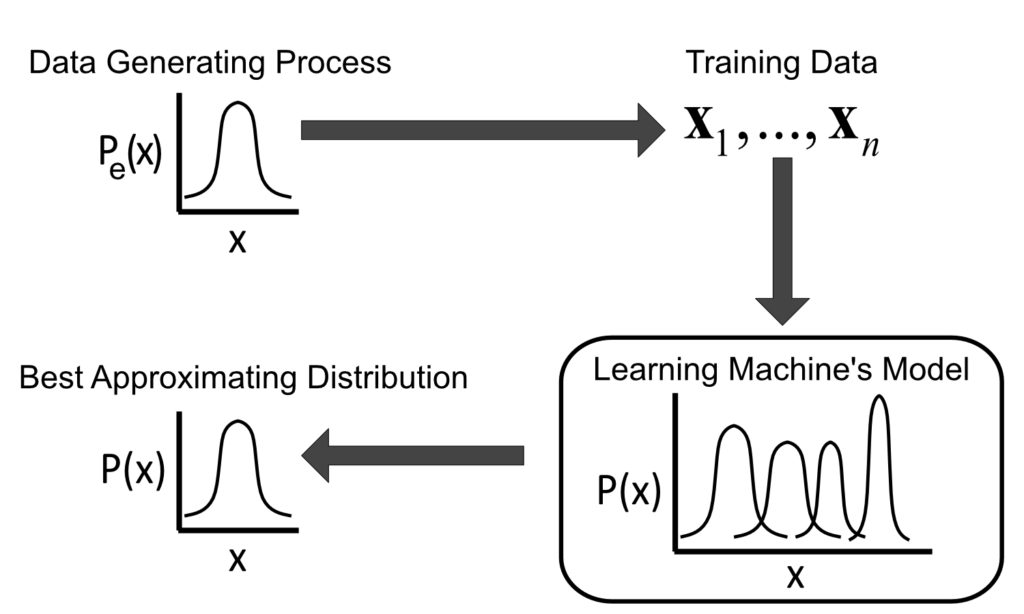

The statistical machine learning framework. The Data Generating Process (DGP) generates observable training data from the unobservable environmental probability distribution Pe. The learning machine observes the training data and uses its beliefs about the structure of the environmental probability distribution to construct a best-approximating distribution P to the environmental distribution Pe. The resulting best-approximating distribution supports the learning machine's decisions and behaviors, with the aim of improving its success when interacting with an environment characterized by uncertainty.

Figure 1.1 from Statistical Machine Learning: A Unified Framework by Richard M. Golden. Copyright 2021 by Richard M. Golden. Written permission from Richard M. Golden is required to download or use Figure 1.1.
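The framework described in the Figure 1.1 caption can be illustrated with a small simulation. The sketch below is not taken from the book: the three-outcome environment, the function names, and the choice of maximum likelihood estimation within a categorical model are all assumptions made for illustration. A data generating process samples training data from an environmental distribution Pe, the learning machine fits a best-approximating distribution P, and P is then used to support a decision.

```python
import random
from collections import Counter


def data_generating_process(p_e, n, rng):
    """Draw n independent observations from the environmental distribution p_e."""
    outcomes = list(p_e)
    weights = [p_e[o] for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=n)


def fit_best_approximating_distribution(data, outcomes):
    """Estimate P by relative frequencies.

    Assumption: the learning machine believes the data are i.i.d. draws from
    a categorical distribution, so the maximum likelihood estimate is the
    observed frequency of each outcome.
    """
    counts = Counter(data)
    n = len(data)
    return {o: counts[o] / n for o in outcomes}


def decide(p):
    """Use the fitted distribution to choose the most probable outcome."""
    return max(p, key=p.get)


rng = random.Random(0)

# Hypothetical environmental distribution Pe. In practice it is unobservable;
# it is written out here only so that the simulation can generate data.
p_e = {"rain": 0.7, "sun": 0.2, "snow": 0.1}

training_data = data_generating_process(p_e, 5000, rng)
p_hat = fit_best_approximating_distribution(training_data, list(p_e))
prediction = decide(p_hat)

print("Estimated P:", p_hat)
print("Decision:", prediction)
```

With a few thousand observations the estimated probabilities should lie close to those of Pe, and the gap generally shrinks as more training data are observed.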

Chapter by Chapter Overview Provided in Podcast LM101-078 (www.learningmachines101.com)